- #Excel text import wizard file origin update#

- #Excel text import wizard file origin full#

- #Excel text import wizard file origin code#

- #Excel text import wizard file origin download#

This is awesome, as we now have the ability to clean up our data and use it as we were hoping to do from the beginning! So let’s do just that…starting with killing off that first line that’s been screwing us up:

#Excel text import wizard file origin update#

Update the file path in the “Source” step to the path to your file.Īnd the output is remarkably different… in fact, it’s the entire contents of the file!. Source = fnRawFileContents("D:\Test\MalformedCSV.csv")

Value = Table.FromList(Lines.FromBinary(File.Contents(fullpath)),Splitter.SplitByNothing()) * Get the raw line by line contents of the file, preventing PQ from interpreting it */įnRawFileContents = (fullpath as text) as table =>

Go to Power Query –> From Other Sources –> Blank Query. #Excel text import wizard file origin code#

A little bit of a code template, which is conveniently included below.On my system it is "D:\Test\MalformedCSV.csv"

#Excel text import wizard file origin full#

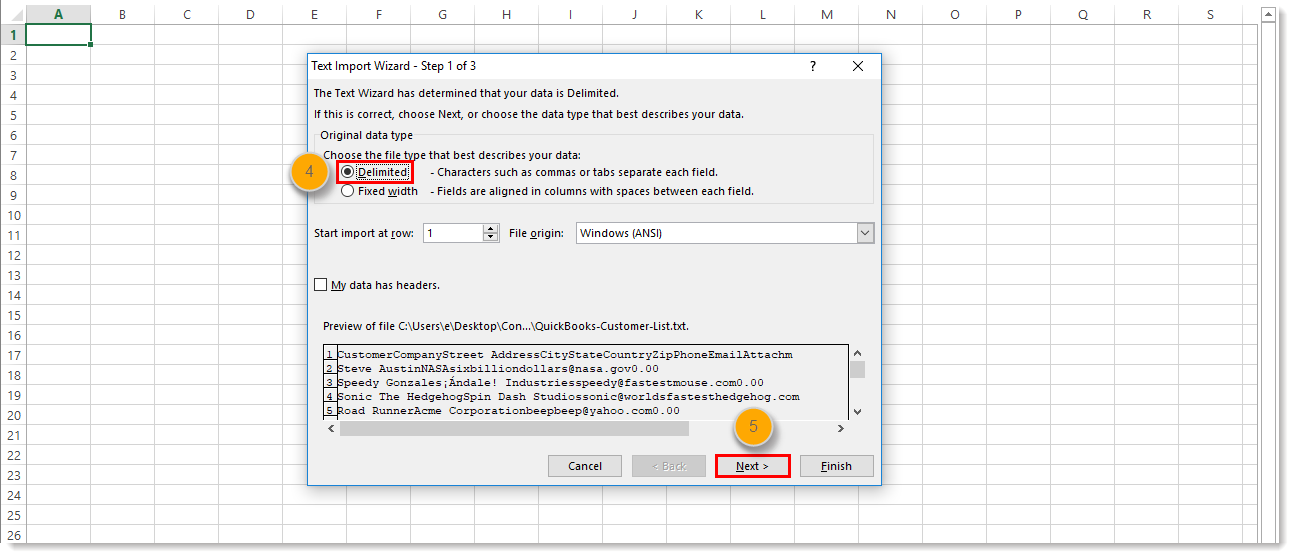

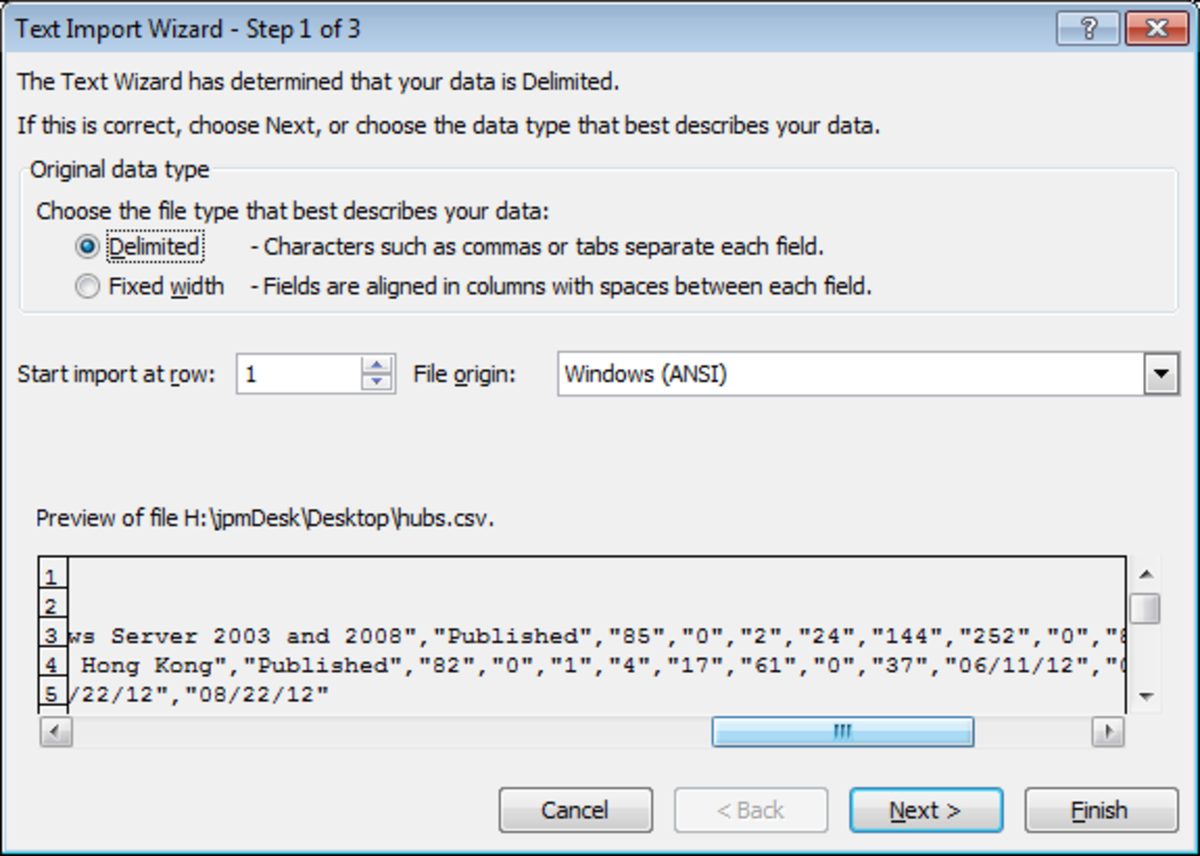

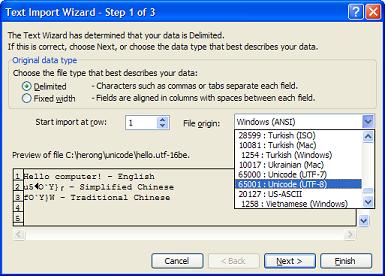

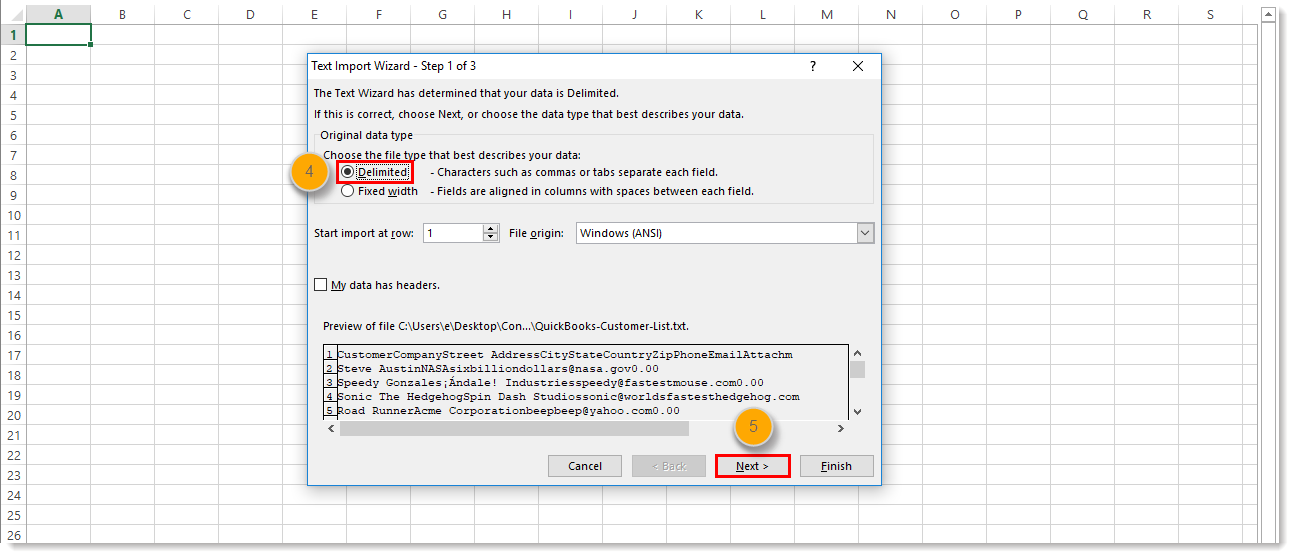

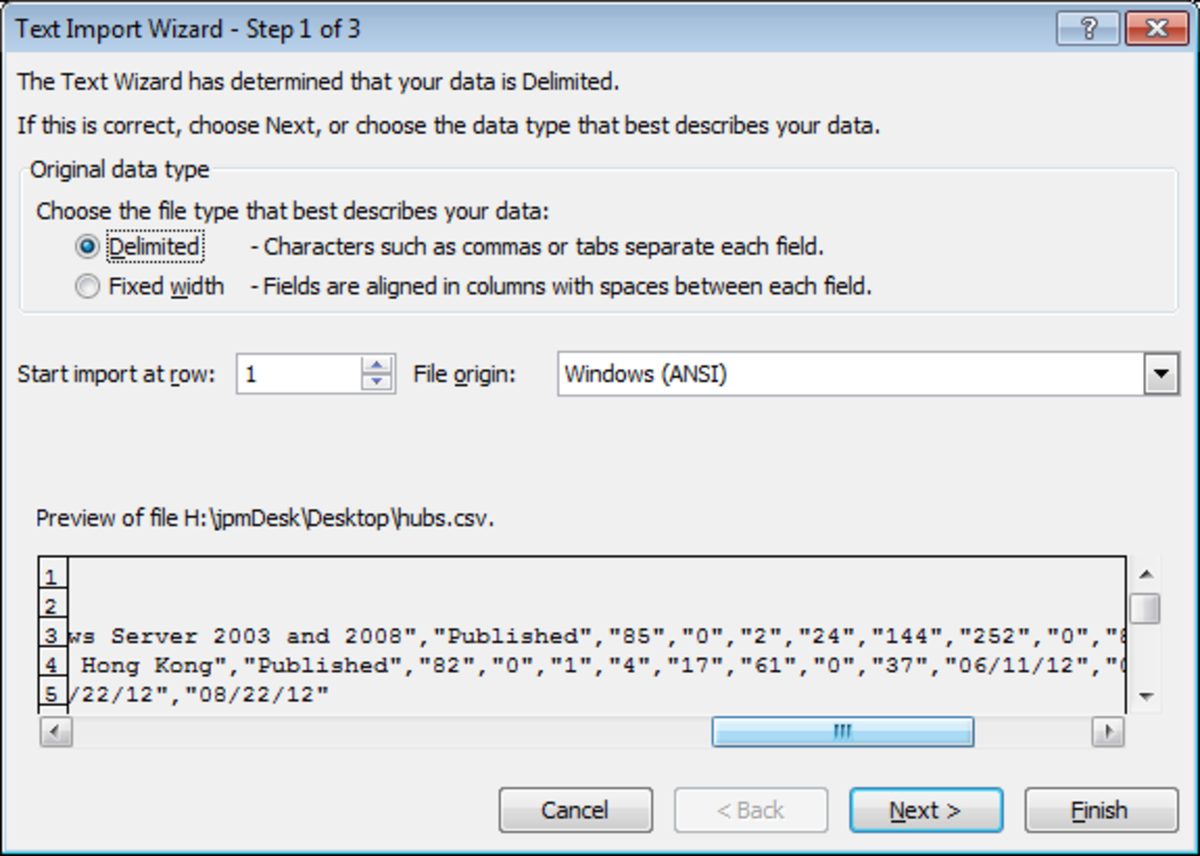

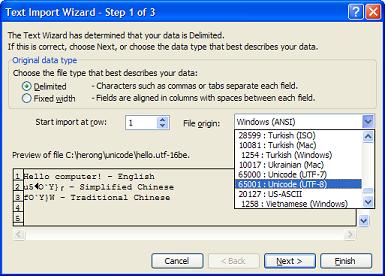

The full file path to the file you want to import (including the file extension). Let’s try this again, this time from scratch. Grr… Force Power Query to Import as a Text File It looks at the file and says “Hey! That’s not a text file, it’s a CSV file!” and then imports it as a CSV file. Click the file filter list in the bottom right and change “Text File (*.txt *.prn)” to “All Files (*.*)”ĭamn. Ah… we’re filtered to only show *.txt and *.prn files… Browse to the folder that holds MalformedCSV.csv. If we can’t rely on the file format being correct, why don’t we just import it as a text file? That would allow us to bring all the rows in, remove the first one, then split by commas. But our second row has three commas, which means three columns… That’s not the same number of columns as our header row, so Power Query freaks out and throws an error for every subsequent record.

Power Query sets us up for a one column table. We therefore must have a one column table.

Our first row has no commas before the line feed. (That’s why you’ll see records in some CSV’s that read, – because there isn’t anything for that record, but we still need the same number of commas to denote the columns.Īnd now some joker builds us a file masquerading as a CSV that really isn’t. And we also know that every CSV file has a consistent number of columns (and therefore commas) on every row. Every comma indicates a column break, every carriage return a new line. If I click the white space beside one of those errors, I get this: Let’s try importing the sample file into Power Query: Well yay, so what, right? Who cares about the guts of a CSV file? The answer is “you” if you ever get one that is built like this… Yet when you look at the data in the rows below, they are plainly separated by commas. Notice the first row… that’s where our issue is. If you open the sample file in Notepad, you’ll see that it contains the following rows:

#Excel text import wizard file origin download#

If you’d like to follow along, you can click here to download MalformedCSV.csv (the sample file). It is NOT A GOOD IDEA.) The Issue in the Real World Awesome… until some bright spark decides to inject a line or two of information above the CSV contents which doesn’t contain any commas. CSV files are a prime example of this, and we should be able to assume that any CSV file will contain a list of Comma Separated Values, one record per line, followed by a new line character. IT standards are generally a beautiful thing, especially in programming, as you can rely on them, knowing that certain rules will always be followed. The problem is that sometimes this doesn’t work as expected, and you need to be able to force Power Query to import as a text file, not the file format that Power Query assumes you have. Power Query takes certain liberties when importing a file, assuming it knows what type of file it is.

/Import_Wizard_Data_Columns_Page_ASCII.png)

I’ve run into this issue in the past, and also got an email about this issue this past week as well, so I figured it’s worth taking a look.

/Import_Wizard_Data_Columns_Page_ASCII.png)

0 kommentar(er)

0 kommentar(er)